After creating my last Alexa skill that used Python and an API, I wanted to extend this by creating a skill that could massively vary its response based upon what the user asked it, i.e. one that didn't just do one thing or respond with one of a limited set of pre-defined responses.

The result is my Amazon Movie Expert skill which aims to be able to provide information on any movie (within reason). Best to see it in action first!

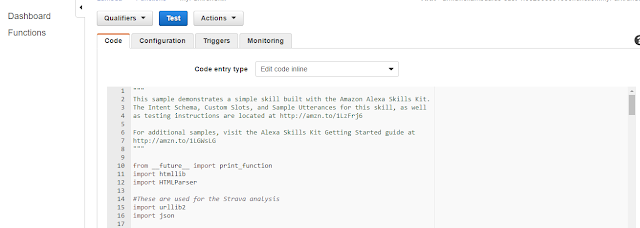

I don't go back to basics in this post about creating Alexa skills. Please look at one of my old posts or the tutorials on the interwebs for more on that.

A key point is that all the movie information comes from the Open Movie Database (OMDB) API which can be found here. All credit to the people who maintain that API. It's excellent!

The idea is that you're able to say something like "Alexa, ask movie expert about the movie Sing". To understand how Alexa interprets this, let's break down what was asked:

- "movie expert" is the invocation name that you configure.

- "about the movie" is the first part of the utterance, and this is configured to map to an Alexa "intent". This basically points to a function in your Lambda handler.

- "sing" is called a slot. This is effectively a parameter that is passed to the Lambda handler.
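To make the mapping from intent to function a bit more concrete, here is a minimal sketch of the kind of dispatcher a Lambda handler might use. The `on_intent` name, the `INTENT_HANDLERS` table and the trimmed-down request are assumptions for illustration, and `get_movie_info` here is a stand-in that only echoes the slot value rather than calling the API.

```python
# Hypothetical sketch: route an Alexa IntentRequest to a handler function.

def get_movie_info(intent, session):
    # Stand-in handler: the real one calls the OMDB API.
    return "Looking up " + intent["slots"]["MovieName"]["value"]


# Map each intent name from the interaction model to a Python function.
INTENT_HANDLERS = {
    "MovieIntent": get_movie_info,
}


def on_intent(intent_request, session):
    """Pick the handler that matches the intent name Alexa sent."""
    intent = intent_request["intent"]
    handler = INTENT_HANDLERS.get(intent["name"])
    if handler is None:
        raise ValueError("Invalid intent: " + intent["name"])
    return handler(intent, session)


# Trimmed-down example of what might arrive for
# "Alexa, ask movie expert about the movie Sing".
example_request = {
    "type": "IntentRequest",
    "intent": {
        "name": "MovieIntent",
        "slots": {"MovieName": {"name": "MovieName", "value": "sing"}},
    },
}

print(on_intent(example_request, session={}))
```

The invocation name never reaches your code. By the time the Lambda function runs, Alexa has already reduced the sentence to an intent name plus slot values.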

So your utterances in the interaction model look something like this:

So the intent is MovieIntent and {MovieName} is your slot. This means the words spoken at the end of the utterance can be any movie name.

You then define the intent structure as:

So here we define that the MovieIntent intent has a slot called MovieName. We also say it's of type "AMAZON.Movie". Slots are either built-in types or custom types, where the developer lists in advance what the user can say. I think the built-in slot type AMAZON.Movie tells Alexa to expect a movie name to be spoken and so narrows down the range of words Alexa must interpret, thus improving accuracy. There's a whole set of built-in slots for you to use.
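As a rough sketch of the two pieces of configuration described above, the snippet below builds an intent schema as a Python dict and prints it as JSON, along with one sample utterance line. Both are reconstructed from the description in this post, so treat the exact layout and wording as assumptions; in practice the schema is JSON entered in the developer console, not Python.

```python
import json

# Assumed intent schema: one intent, MovieIntent, with a single slot.
intent_schema = {
    "intents": [
        {
            "intent": "MovieIntent",
            "slots": [
                {"name": "MovieName", "type": "AMAZON.Movie"},
            ],
        }
    ]
}

# Assumed sample utterance: intent name, then the phrase, then the slot.
sample_utterances = [
    "MovieIntent about the movie {MovieName}",
]

print(json.dumps(intent_schema, indent=2))
print("\n".join(sample_utterances))
```

The slot name in curly braces has to match the slot name in the schema, otherwise the spoken movie name has nowhere to go.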

This means that the movie name spoken at the end of the utterance is passed to the AWS Lambda function as a parameter. You then write a function to handle the request. Here's a laughably simple architecture diagram showing how it all fits together:

Below is the Python function that handles MovieIntent. Key points:

- Many attributes of the intent are passed to the function in the parameter "intent".

- You can assign the slot to a variable by accessing intent['slots']['MovieName']['value'].

- The function then forms a URL, passes it to the Open Movie Database (OMDB) API and captures the response.

- The response is in JSON format. Key elements are extracted and form the string that Alexa reads back to the user.

import json
import urllib2  # Python 2 module; on Python 3 use urllib.request instead

def get_movie_info(intent, session):
    """Main function to handle requests for movie information."""
    card_title = intent['name']
    session_attributes = {}
    should_end_session = True
    reprompt_text = None

    # Only continue if Alexa actually captured a value for the slot
    if 'MovieName' in intent['slots'] and 'value' in intent['slots']['MovieName']:
        # Get the slot information
        MovieToGet = TurnToURL(intent['slots']['MovieName']['value'])
        # Form the URL to use (OmdbApiUrl and UrlEnding are string
        # constants defined elsewhere in the skill)
        URLToUse = OmdbApiUrl + MovieToGet + UrlEnding
        print("This URL will be used: " + URLToUse)
        try:
            # Call the API
            APIResponse = urllib2.urlopen(URLToUse).read()
            # Get the JSON structure
            MovieJSON = json.loads(APIResponse)
            # Form the string to use
            speech_output = "You asked for the movie " + MovieJSON["Title"] + ". " \
                            "It was released in " + MovieJSON["Year"] + ". " \
                            "It was directed by " + MovieJSON["Director"] + ". " \
                            "It starred " + MovieJSON["Actors"] + ". " \
                            "The plot is as follows: " + MovieJSON["Plot"] + ". " \
                            "Thank you for using the Movie Expert Skill. "
        except Exception:
            # Covers network failures, bad JSON and responses missing a key
            speech_output = "I encountered a web error getting information about that movie. " \
                            "Please try again. " \
                            "Thank you for using the Movie Expert Skill. "
    else:
        speech_output = "I encountered an error getting information about that movie. " \
                        "Please try again. " \
                        "Thank you for using the Movie Expert Skill. "

    return build_response(session_attributes, build_speechlet_response(
        card_title, speech_output, reprompt_text, should_end_session))

def TurnToURL(InSlot):
    """Turn the slot text into the format the URL needs, with + signs between words."""
    print(InSlot)
    # Split the string on whitespace and join the parts back together with + signs
    return "+".join(InSlot.split())
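For anyone on a Python 3 runtime, where `urllib2` no longer exists, the same encoding step can be sketched with the standard library's `urllib.parse.quote_plus`, which also escapes characters such as apostrophes that a plain split-and-join leaves alone. The `turn_to_url` and `build_speech` helpers and the sample dictionaries below are made up for illustration, and the check on the `Response` field assumes OMDB signals a failed lookup with `"Response": "False"`. No network call is made here.

```python
from urllib.parse import quote_plus


def turn_to_url(slot_value):
    """Encode the spoken movie name for use in a query string."""
    # Collapse repeated whitespace first, then let quote_plus do the escaping.
    return quote_plus(" ".join(slot_value.split()))


def build_speech(movie):
    """Build the spoken reply from an already-parsed response (hypothetical helper)."""
    # Assumption: "Response" is "False" when the movie could not be found.
    if movie.get("Response") == "False" or "Title" not in movie:
        return "I couldn't find that movie. Please try again."
    parts = ["You asked for the movie {}.".format(movie["Title"])]
    # Only mention the fields that are actually present in the response.
    for key, template in [
        ("Year", "It was released in {}."),
        ("Director", "It was directed by {}."),
        ("Actors", "It starred {}."),
        ("Plot", "The plot is as follows: {}"),
    ]:
        if movie.get(key):
            parts.append(template.format(movie[key]))
    return " ".join(parts)


print(turn_to_url("the lord of the rings"))
print(build_speech({"Title": "Sing", "Year": "2016", "Response": "True"}))
print(build_speech({"Response": "False", "Error": "Movie not found!"}))
```

Keeping the speech-building step separate from the web call means it can be tried out with hand-written dictionaries like the ones above, without touching the API.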